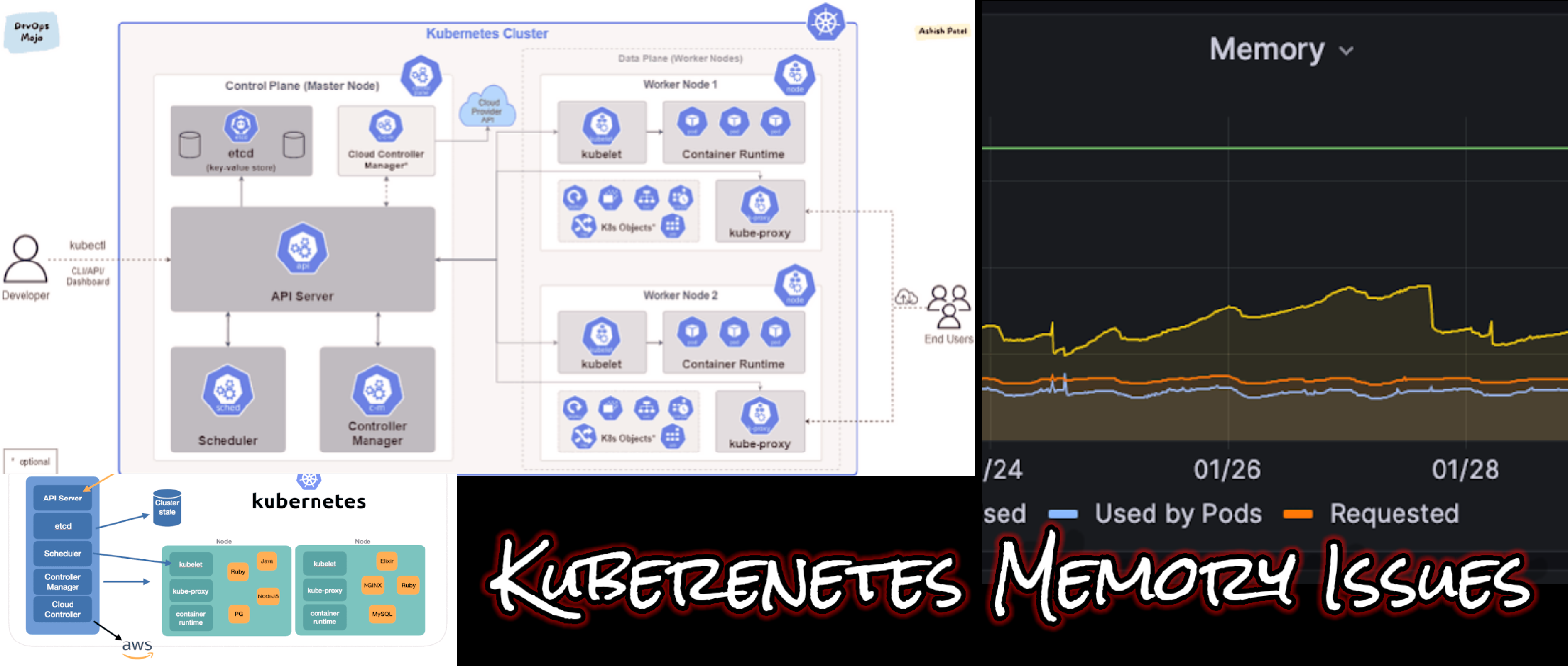

In the fast-paced realm of container orchestration, encountering Out of Memory (OOM) issues with Pods is not uncommon. Understanding the root causes and implementing effective solutions is crucial for maintaining a resilient and efficient Kubernetes environment. In this guide, we'll delve into common OOMKilled scenarios and provide actionable steps to address each one.

### OOMKilled: Common Causes and Resolutions

#### 1. Increased Application Load

*Cause:* Memory limit reached due to heightened application load.

*Resolution:* Increase the container's memory limit (`resources.limits.memory`) in the pod specification.
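As a rough sketch of that resolution, the memory limit of a Deployment's container can be raised with `kubectl set resources`. The helper name `raise_memory_limit` and the example values (`web`, `app`, `512Mi`, `256Mi`) are placeholders, not values from a real cluster; size them from your own measurements.

```shell
# Hypothetical helper: raise the memory limit and request of one container
# in a Deployment. All names and sizes passed in are placeholders.
raise_memory_limit() {
  deployment="$1"   # Deployment name, e.g. web
  container="$2"    # container name inside the pod template, e.g. app
  limit="$3"        # new memory limit, e.g. 512Mi
  request="$4"      # new memory request, e.g. 256Mi
  kubectl set resources deployment "$deployment" \
    --containers="$container" \
    --limits=memory="$limit" \
    --requests=memory="$request"
}

# Example call (placeholder values):
#   raise_memory_limit web app 512Mi 256Mi
```

Changing the pod template this way triggers a rollout, so the pods are recreated with the new limit.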

#### 2. Memory Leak

*Cause:* Memory limit reached due to a memory leak.

*Resolution:* Debug the application and resolve the memory leak.
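Before digging into the application code, it can help to confirm that memory really does keep growing. The sketch below samples per-container usage with `kubectl top`, which assumes the metrics-server add-on is installed. The helper name `sample_pod_memory` and its defaults are made up for illustration.

```shell
# Hypothetical helper: print a pod's per-container memory usage several times.
# Assumes metrics-server is running, since `kubectl top` depends on it.
sample_pod_memory() {
  pod="$1"              # pod name
  samples="${2:-5}"     # number of samples to take
  interval="${3:-60}"   # seconds to wait between samples
  i=0
  while [ "$i" -lt "$samples" ]; do
    date
    kubectl top pod "$pod" --containers
    i=$((i + 1))
    # Sleep only between samples, not after the last one.
    if [ "$i" -lt "$samples" ]; then
      sleep "$interval"
    fi
  done
}

# Example call (placeholder pod name): ten samples, one every 30 seconds
#   sample_pod_memory my-pod 10 30
```

Usage that climbs steadily while the load stays flat points toward a leak. Usage that rises and falls with traffic points back to scenario 1.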

#### 3. Node Overcommitment

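*Cause:* The combined memory used by the pods on a node exceeds the memory the node has available.

*Resolution:* Adjust memory requests/limits in container specifications so that the pods scheduled onto a node fit within its memory.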
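To see whether a node is overcommitted, one option is to compare its allocatable memory with the totals that `kubectl describe node` reports under "Allocated resources". The helper name `node_memory_summary` is hypothetical, and the number of lines kept after the section header is a guess that may need adjusting for your kubectl version.

```shell
# Hypothetical helper: show a node's allocatable memory next to the
# requests/limits already allocated to the pods running on it.
node_memory_summary() {
  node="$1"   # node name, as listed by `kubectl get nodes`
  printf 'Allocatable memory: '
  kubectl get node "$node" -o jsonpath='{.status.allocatable.memory}{"\n"}'
  # Keep the "Allocated resources" section of the describe output.
  kubectl describe node "$node" | grep -A 8 '^Allocated resources:'
}

# Example call (placeholder node name):
#   node_memory_summary worker-1
```

A memory limits total above 100 percent means the pods on that node are allowed to use more memory than the node can provide.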

### OOMKilled: Diagnosis and Resolution Steps

1. **Gather Information**

Save `kubectl describe pod [name]` output for reference.
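A minimal sketch of this step saves the `describe` output together with the logs of the previous (killed) container instance. The helper name `collect_pod_info` and the file names are arbitrary choices for illustration.

```shell
# Hypothetical helper: save diagnostic output for one pod into a directory.
collect_pod_info() {
  pod="$1"            # pod name
  outdir="${2:-.}"    # target directory, defaults to the current one
  kubectl describe pod "$pod" > "$outdir/$pod-describe.txt"
  # Logs of the previous container instance, if one exists.
  # For multi-container pods, add -c <container> to pick one.
  kubectl logs "$pod" --previous > "$outdir/$pod-previous.log" 2>&1 || true
}

# Example call (placeholder names):
#   collect_pod_info my-pod /tmp/oom-debug
```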

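2. **Check the Pod Status for Exit Code 137**

In the container's "Last State" section of the output, look for "Reason: OOMKilled" and "Exit Code: 137". The code 137 is 128 + 9, which means the process was stopped with SIGKILL.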
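The same fields can also be read directly from the pod status instead of scanning the `describe` output. This is a sketch using a JSONPath query; the helper name `last_termination` is made up.

```shell
# Hypothetical helper: for each container in a pod, print its name, the
# reason for its last termination, and the exit code, separated by tabs.
last_termination() {
  pod="$1"   # pod name
  kubectl get pod "$pod" -o jsonpath='{range .status.containerStatuses[*]}{.name}{"\t"}{.lastState.terminated.reason}{"\t"}{.lastState.terminated.exitCode}{"\n"}{end}'
}

# Example call (placeholder pod name):
#   last_termination my-pod
```

Containers that have never been terminated print empty reason and exit code columns.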

3. **Identify the Cause**

Determine whether the container exceeded its own memory limit or the node ran out of memory because it was overcommitted.

4. **Troubleshooting**

- If due to container limit, assess if the application needs more memory.

- Increase memory limit if necessary; otherwise, debug and fix the memory leak.

- If due to node overcommit, review memory requests/limits to avoid overcommitting nodes.

- Decide which pods can be terminated first when a node runs short of memory, based on their resource usage and importance.
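One input to that prioritization is the pod's Quality of Service (QoS) class, which Kubernetes derives from the requests and limits set on its containers. When a node runs out of memory, `BestEffort` pods are generally the first candidates to be killed and `Guaranteed` pods the last. The sketch below reads the class for a pod. The helper name `pod_qos_class` is hypothetical.

```shell
# Hypothetical helper: print the QoS class Kubernetes assigned to a pod
# (Guaranteed, Burstable, or BestEffort).
pod_qos_class() {
  pod="$1"   # pod name
  kubectl get pod "$pod" -o jsonpath='{.status.qosClass}{"\n"}'
}

# Example call (placeholder pod name):
#   pod_qos_class my-pod
```

Setting requests equal to limits for both CPU and memory on every container puts a pod in the `Guaranteed` class, which is one way to protect critical workloads.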
