Tuesday 26 December 2023

Knowing When to Say Goodbye: Signs It's Time to Resign from Your Job

Sunday 24 December 2023

Navigating the Ethical Minefield: Artificial Intelligence in Job Interviews (AI Proxy)

Wednesday 20 December 2023

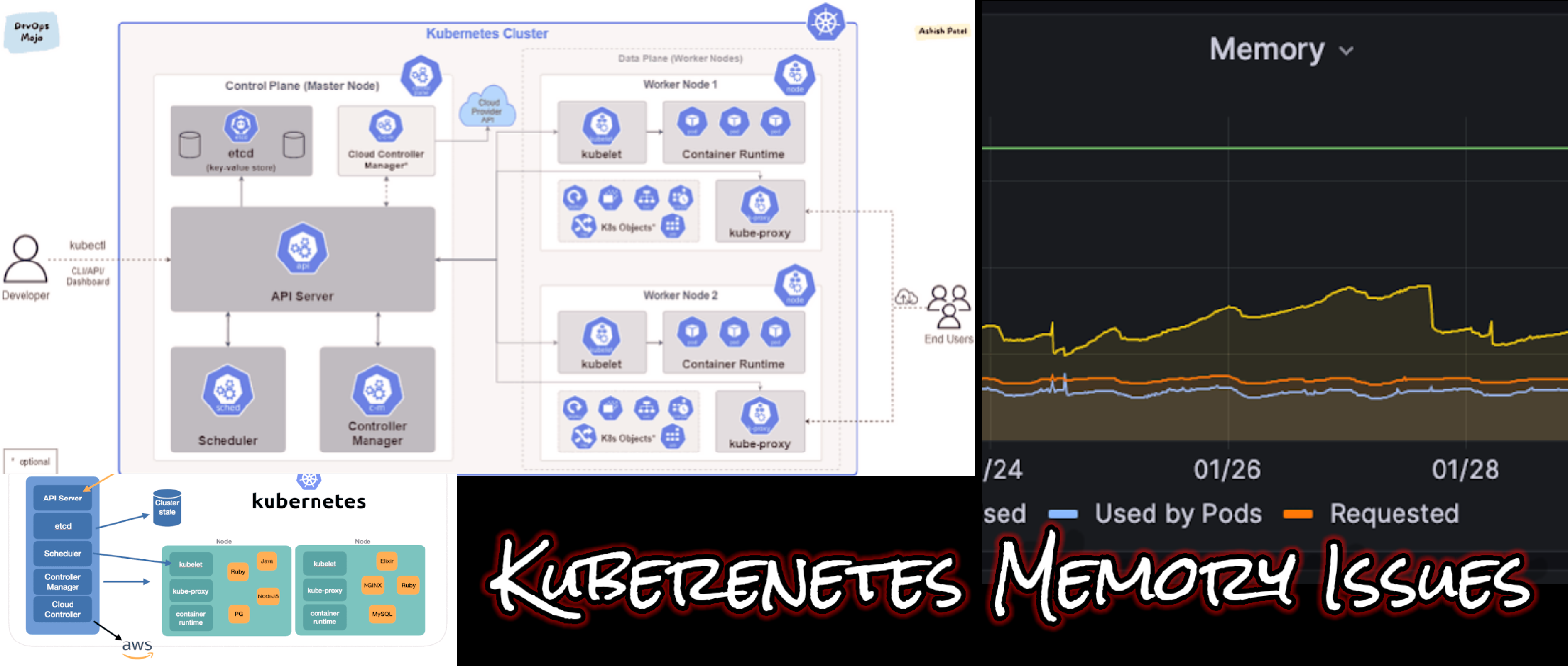

Kubernetes Memory Challenges - OOMKilled Issues

Thursday 14 December 2023

Convert JSON to Avro using JMeter Groovy scripting

How to encrypt login credentials in your JMeter script?

Monday 7 August 2023

Export raw analysis data to Excel in LoadRunner | How to export raw data to Excel in LR Analysis

Saturday 29 July 2023

Correlation in the Java Vuser protocol

```java
import lrapi.lr;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Java Vuser scripts keep init/action/end in the Actions class
public class Actions {

    public int init() throws Throwable {
        // Perform initialization tasks
        return 0;
    }

    public int action() throws Throwable {
        // Send a request and receive the response
        String response = sendRequest();

        // Extract the dynamic value using a regular expression
        String dynamicValue = extractDynamicValue(response);

        // Store the dynamic value in a parameter
        lr.save_string(dynamicValue, "dynamicParam");

        // Build the next request from a template and replace the placeholder with the parameter
        String requestTemplate = "sessionId={{dynamicValue}}"; // Example template: replace with your actual request
        String newRequest = replaceDynamicValueWithParam(requestTemplate, "dynamicParam");

        // Send the updated request
        sendUpdatedRequest(newRequest);

        return 0;
    }

    public String sendRequest() {
        // Send the initial request and receive the response
        String response = ""; // Replace with actual request code
        return response;
    }

    public String extractDynamicValue(String response) {
        // Example pattern: captures the value of sessionId="..."; adjust it to your response
        Matcher matcher = Pattern.compile("sessionId=\"(.*?)\"").matcher(response);
        if (matcher.find()) {
            return matcher.group(1);
        }
        lr.error_message("Dynamic value not found in the response");
        return "";
    }

    public String replaceDynamicValueWithParam(String request, String paramName) {
        // replace() matches the placeholder literally; replaceAll() would treat "{{" as
        // regex syntax and throw a PatternSyntaxException
        return request.replace("{{dynamicValue}}", lr.eval_string("{" + paramName + "}"));
    }

    public void sendUpdatedRequest(String request) {
        // Replace with actual code to send the request
    }

    public int end() throws Throwable {
        // Perform cleanup tasks
        return 0;
    }
}
```

Thursday 27 July 2023

Common issues and solutions when replaying Citrix ICA protocol scripts

1. Citrix session initialization: If the Citrix session fails to initialize properly during replay, it may result in connection failures or an inability to access the desired applications or desktops. This can be caused by incorrect configuration settings, network connectivity issues, or issues with the Citrix server.

2. Dynamic data handling: Citrix sessions often involve dynamic data, such as session IDs or timestamps, which may vary between recording and replay. If these dynamic values are not appropriately correlated or parameterized in the script, it can lead to failures during replay. Ensure that dynamic values are captured and replaced with appropriate parameters or correlation functions.

3. Timing and synchronization issues: Timing-related problems can occur if there are delays or differences in response times between the recording and replay sessions. This can lead to synchronization issues, where the script may fail to find expected UI elements or perform actions within the expected time frame. Adjusting think times, adding synchronization points, or enhancing script logic can help address these issues.

4. Window or screen resolution changes: If the Citrix session or client machine has a different window or screen resolution during replay compared to the recording, it can cause elements or UI interactions to fail. This can be resolved by adjusting the script or ensuring that the client machine and Citrix session are configured with consistent resolution settings.

5. Application changes or updates: If the application or desktop being accessed through Citrix undergoes changes or updates between recording and replay, it can result in script failures. Elements, UI structures, or workflows may have changed, leading to script errors. Regularly validate and update the script to accommodate any changes in the application under test.

6. Network or connectivity issues: Network or connectivity problems during replay can impact the successful execution of the script. These issues can include network latency, packet loss, or firewall restrictions. Troubleshoot and address any network-related problems to ensure smooth script replay.

7. Load or performance-related issues: During load or performance testing, issues such as resource contention, high server load, or insufficient server capacity can impact the replay of Citrix scripts. These issues can result in slow response times, connection failures, or application unavailability. Monitor server and network performance, and optimize the infrastructure as needed to address these problems.

When you encounter replay issues, analyze error logs, server logs, and performance metrics to identify the root causes of the failures. Typical troubleshooting steps include reviewing the recorded script, correlating dynamic values, adjusting synchronization points, and updating the script to reflect application changes. Citrix documentation, Citrix forums and support channels, and your Citrix administrators can also provide further insight into specific replay issues in your Citrix ICA environment.

Tuesday 25 July 2023

How to insert a rendezvous point in a TruClient script in LoadRunner

Monday 10 July 2023

How to Convert Postman Collection to Apache JMeter (JMX)?

Tuesday 4 July 2023

How to Integrate Azure DevOps with LoadRunner?

Automated Performance Testing: Azure DevOps allows you to incorporate LoadRunner tests into your CI/CD pipelines, enabling automated performance testing as part of your development process. This ensures that performance testing is executed consistently and regularly, helping identify and address performance issues early in the development lifecycle.

Seamless Collaboration: By integrating LoadRunner with Azure DevOps, you can centralize your performance testing efforts within the same platform used for project management, version control, and continuous integration. This promotes seamless collaboration among development, testing, and operations teams, streamlining communication and enhancing efficiency.

Version Control and Traceability: Azure DevOps provides version control capabilities, allowing you to track changes to your LoadRunner test scripts and configurations. This ensures traceability and provides a historical record of test modifications, facilitating collaboration and troubleshooting.

Test Reporting and Analytics: Azure DevOps offers powerful reporting and analytics features, which can be leveraged to gain insights into performance test results. You can visualize and analyze test metrics, identify performance trends, and generate reports to share with stakeholders. This helps in making data-driven decisions and identifying areas for performance optimization.

Continuous Monitoring and Alerting: Azure DevOps integration allows you to incorporate LoadRunner tests into your continuous monitoring and alerting systems. You can set up thresholds and notifications based on performance test results, enabling proactive monitoring of performance degradation or anomalies in production environments.

Scalability and Flexibility: Azure DevOps provides a scalable and flexible infrastructure to execute LoadRunner tests. You can leverage Azure cloud resources to spin up test environments on-demand, reducing the need for maintaining dedicated infrastructure and improving resource utilization.

How to integrate Azure DevOps with LoadRunner:

Create a service connection:

1. Ensure you have an active Azure DevOps account.

2. Create a new project or select an existing project in Azure DevOps.

3. Navigate to the project settings and select the "Service connections" option.

4. Create a new service connection of type "Generic" or "Others".

5. Provide the necessary details such as the connection name, URL, and authentication method (username/password or token).

6. Save the service connection.

Install the LoadRunner extension:

1. In Azure DevOps, open Organization settings > Extensions, or browse the Visual Studio Marketplace.

2. Search for the "LoadRunner" extension and install it.

3. Follow the instructions to complete the installation.

Configure LoadRunner tasks in the Azure DevOps pipeline:

1. Create a new Azure DevOps pipeline for your LoadRunner tests, or edit an existing one.

2. Add a new task to your pipeline.

3. Search for the "LoadRunner" task and add it to your pipeline.

4. Configure the LoadRunner task with the necessary parameters, such as the path to your LoadRunner test script, test settings, and other options.

5. Save and commit your pipeline changes.

Run the LoadRunner tests in Azure DevOps:

1. Trigger a build or release pipeline in Azure DevOps that includes the LoadRunner task.

2. Azure DevOps will execute the LoadRunner task, which will run your LoadRunner tests based on the configured parameters.

3. Monitor the test execution progress and results in Azure DevOps.

That's it. Happy testing!

Monday 3 July 2023

How to use .pem and .key certificate files in VuGen LoadRunner scripting | How to import .pem & .key certificates in VuGen

1. Place the ".pem" and ".key" files in a location accessible to your VuGen script, such as the script folder or a shared location.

2. Open your VuGen script and navigate to the appropriate section where you need to use the certificate.

3. In the script editor, add the following lines of code to load and use the certificate files:

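```c
web_set_certificate_ex(
    "CertFilePath=<pem_file_path>",
    "CertFormat=PEM",
    "KeyFilePath=<key_file_path>",
    "KeyFormat=PEM",
    "Password=cert_password",
    "CertIndex=1",
    LAST);
```

Replace "<pem_file_path>" with the path to your ".pem" file and "<key_file_path>" with the path to your ".key" file. If the private key is password-protected, replace "cert_password" with the actual password; otherwise, remove the "Password=" argument.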

4. Save your script.

By using the web_set_certificate_ex function, you instruct the Vuser to load the specified ".pem" and ".key" files and present them as the client certificate for SSL/TLS connections during replay.

Make sure the certificate files are valid and properly formatted. If there are any issues with the certificate files, the script may fail to execute or encounter SSL/TLS errors.

To make the certificates available while recording, import them through the recording options:

1. Launch VuGen: Open LoadRunner VuGen and create a new script or open an existing one.

2. Recording Options: In the VuGen toolbar, click on "Recording Options" to open the recording settings dialog box.

3. Proxy Recording: In the recording settings dialog box, select the "Proxy" tab. Enable the "Enable proxy recording" option if it is not already enabled.

4. Certificate Settings: Click on the "Certificate" button in the recording settings dialog box. This will open the certificate settings dialog box.

5. Import Certificates: In the certificate settings dialog box, click on the "Import" button to import the .pem and .key certificates. Browse to the location where the certificates are stored and select the appropriate files.

6. Verify Certificates: After importing the certificates, verify that they appear in the certificate list in the settings dialog box. Make sure that the certificates are associated with the correct domains or URLs that you want to record.

7. Apply and Close: Click on the "OK" button to apply the certificate settings and close the certificate settings dialog box.

8. Save Recording Options: Back in the recording settings dialog box, click on the "OK" button to save the recording options.

9. Start Recording: Start the recording process by clicking on the "Start Recording" button in the VuGen toolbar. Make sure to set the browser's proxy settings to the address and port specified in the recording options.

10. Proceed with Recording: Perform the actions that you want to record in the web application or system under test. VuGen will capture the network traffic, including the HTTPS requests and responses.

During recording, VuGen presents the imported certificate whenever the server requests client-certificate authentication, so the secured pages load and their encrypted exchanges between client and server can be recorded and analyzed.

Wednesday 28 June 2023

What is SSL? | Secure Sockets Layer SSL

Sites enable SSL by installing an SSL certificate on their web server. The certificate proves the site's identity to the browser, and the browser and server then use it to set up an encrypted connection. Users can recognize an SSL-enabled site by the small padlock icon next to the URL in the address bar and by the https:// prefix instead of http://. Today these connections actually use TLS, the successor to SSL, but the name "SSL" is still widely used.

Tuesday 23 May 2023

Converting Fiddler sessions to LoadRunner scripts | Using Fiddler to generate a LoadRunner VuGen script

1. Capture Traffic in Fiddler: Launch Fiddler and start capturing the desired traffic by clicking the "Start Capture" button. Perform the actions you want to record, such as navigating through web pages or interacting with a web application.

2. Export Traffic Sessions: Once you have captured the necessary traffic, go to Fiddler's "File" menu and choose "Export Sessions > All Sessions" to save the captured traffic sessions to a file on your computer.

3. Convert Sessions to LoadRunner Script: Now, you need to convert the exported sessions into a LoadRunner script format. This process involves parsing the captured traffic and generating the corresponding script code. LoadRunner does not have a direct built-in feature for importing Fiddler sessions, so you'll need to use a third-party tool or script to perform the conversion.

One popular approach is to use a tool called "Fiddler2LR" developed by the LoadRunner community. It is an open-source tool that converts Fiddler sessions to LoadRunner scripts. You can find the tool and instructions on how to use it on GitHub or other community websites. Alternatively, you can write a custom script or use a programming language of your choice to parse the exported sessions and generate the LoadRunner script code manually. This approach requires more technical expertise and knowledge of LoadRunner's scripting language.

4. Adjust Script Logic: After the conversion, review the generated LoadRunner script code and make any necessary adjustments or enhancements. LoadRunner scripts often require modifications to handle dynamic values, correlations, think times, and other performance testing-related considerations.

5. Import and Enhance the Script in LoadRunner: Finally, open the generated script in VuGen and add it to a scenario in the LoadRunner Controller, where you can configure load profiles, adjust user profiles, and specify other test parameters as needed.

Remember that the process of converting Fiddler traffic to a LoadRunner script may vary depending on the complexity of your application and the specific requirements of your performance test. It's crucial to have a good understanding of LoadRunner scripting and performance testing concepts to ensure an accurate and effective conversion.
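If you take the custom-script route from step 3, the core of such a converter is small. The Java sketch below is only an illustration under assumptions: the CapturedRequest record and the LrScriptGenerator class are hypothetical, parsing of the Fiddler export (for example a HAR export) is left out, and every captured request is emitted as a web_custom_request step with just the URL, Method and Body attributes. Headers, correlation and think times still have to be added as described in step 4.

```java
import java.util.List;

// Hypothetical representation of one request captured in Fiddler.
// Filling it from the exported sessions (for example a HAR export) is not shown.
record CapturedRequest(String method, String url, String body) {}

class LrScriptGenerator {

    // Escape characters that would otherwise break a C string literal.
    static String escapeC(String s) {
        return s.replace("\\", "\\\\")
                .replace("\"", "\\\"")
                .replace("\r", "\\r")
                .replace("\n", "\\n");
    }

    // Emit one web_custom_request step (URL, Method and optional Body only).
    static String toStep(String stepName, CapturedRequest r) {
        StringBuilder sb = new StringBuilder();
        sb.append("    web_custom_request(\"").append(escapeC(stepName)).append("\",\n");
        sb.append("        \"URL=").append(escapeC(r.url())).append("\",\n");
        sb.append("        \"Method=").append(escapeC(r.method())).append("\",\n");
        if (r.body() != null && !r.body().isEmpty()) {
            sb.append("        \"Body=").append(escapeC(r.body())).append("\",\n");
        }
        sb.append("        LAST);\n\n");
        return sb.toString();
    }

    // Wrap all generated steps in a VuGen Action() function.
    static String toAction(List<CapturedRequest> requests) {
        StringBuilder sb = new StringBuilder("Action()\n{\n");
        for (int i = 0; i < requests.size(); i++) {
            sb.append(toStep("step_" + (i + 1), requests.get(i)));
        }
        sb.append("    return 0;\n}\n");
        return sb.toString();
    }
}
```

The generated text is a starting point to paste into a VuGen script and review there, not a replacement for recording or for the manual enhancements in step 4.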

Monday 22 May 2023

Call one sampler (HTTP) from another sampler (JSR223) in JMeter | JMeter Sampler Integration

Yes, it is possible to call one sampler (HTTP) from another sampler (JSR223) in JMeter. JMeter provides flexibility to customize and control the flow of your test plan using various components, including JSR223 samplers.

Thursday 18 May 2023

IP Spoofing in JMeter | How to spoof IP addresses in JMeter?

Steps to do IP spoofing in JMeter:

1. Open your JMeter test plan.

2. Add a "HTTP Request Defaults" configuration element by right-clicking on your Test Plan and selecting "Add > Config Element > HTTP Request Defaults."

3. In the "HTTP Request Defaults" panel, enter the target server's IP address or hostname in the "Server Name or IP" field.

4. Add a "User Defined Variables" component by right-clicking on your Thread Group and selecting "Add > Config Element > User Defined Variables."

5. In the "User Defined Variables" panel, define a variable for the IP address you want to spoof. For example, you can set a variable name like `ip_address` and assign a value like `192.168.1.100`.

6. Add a "HTTP Header Manager" component by right-clicking on your Thread Group and selecting "Add > Config Element > HTTP Header Manager."

7. In the "HTTP Header Manager" panel, click on the "Add" button and set the following fields:

- Name: `X-Forwarded-For`

- Value: `${ip_address}` (referring to the variable defined in step 5)

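Now, when you send requests using JMeter, each request will include the `X-Forwarded-For` header with the spoofed IP address. Note that this only adds a header: the TCP connection still comes from the load generator's real IP, so the spoofed address is seen only by servers, load balancers, or proxies that are configured to trust `X-Forwarded-For`.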
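A User Defined Variable holds a single value, so with the steps above every thread sends the same address. If each virtual user should present a different address, one option is to replace that variable with a CSV Data Set Config whose Variable Names field is set to ip_address. The Java sketch below generates such a file; the IpListGenerator class, the file name and the 192.168.1.x range are illustrative assumptions, not part of JMeter.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;

class IpListGenerator {

    // Build `count` consecutive IPv4 addresses that share a three-octet prefix,
    // e.g. generate("192.168.1.", 100, 3) -> 192.168.1.100, .101, .102
    static List<String> generate(String prefix, int first, int count) {
        if (first < 0 || count < 0 || first + count - 1 > 255) {
            throw new IllegalArgumentException("last octet must stay within 0-255");
        }
        List<String> ips = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            ips.add(prefix + (first + i));
        }
        return ips;
    }

    // Write one address per line (no header row), ready for a CSV Data Set Config
    // whose "Variable Names" field is set to ip_address.
    static void writeCsv(Path file, List<String> ips) {
        try {
            Files.write(file, ips);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

For example, `IpListGenerator.writeCsv(Path.of("ip_addresses.csv"), IpListGenerator.generate("192.168.1.", 100, 50))` writes the 50 addresses 192.168.1.100 to 192.168.1.149. Point the CSV Data Set Config at that file and keep the `${ip_address}` reference in the HTTP Header Manager.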

Thursday 4 May 2023

What's the use of the web_set_rts_key function in LoadRunner? | Overriding HTTP errors in LoadRunner

Tuesday 2 May 2023

Recording macro-enabled Excel in LoadRunner | Record Excel with LoadRunner

Performance Testing automation in Azure Boards using JMeter

Saturday 29 April 2023

Performance Testing - Common Database issues | DB issues in Performance testing

Tuesday 25 April 2023

Vuser memory footprints in LoadRunner | Calculating Memory Requirements for Vusers in LoadRunner: Protocol-Specific Considerations

Wednesday 19 April 2023

"Neotys Team Server cannot be reached. Please check the server configuration" error in NeoLoad

- Check the server configuration: Ensure that the server configuration is correct and that the necessary services are running. You may want to consult the documentation or contact the vendor for further assistance.

- Check network connectivity: Verify that the team server is accessible from the network where you are trying to connect. Check if there are any network issues or firewalls that could be blocking the connection.

- Restart the team server: Try restarting the Neotys team server and see if this resolves the issue.

- Update the team server: If there is an available update for the team server, consider updating to the latest version as it may address the issue you are facing.

- Contact Neotys support: If the above steps do not resolve the issue, you may want to contact Neotys support for further assistance. They should be able to provide more detailed troubleshooting steps and help you resolve the issue.

Tuesday 11 April 2023

java.lang.RuntimeException: Unable to resolve service by invoking protoc: Unable to execute protoc binary

If your JMeter gRPC script throws this error, the protocol buffer compiler (protoc) binary is most likely missing or cannot be executed. To resolve this error, follow these steps:

- Make sure that the protobuf compiler (protoc) is installed on your system and that its directory is included in the PATH environment variable.

- Check the JMeter log files for more information about the error, such as the exact path to the protoc binary file.

- Make sure the protoc binary is executable. On Linux or macOS, run the following command in a terminal: `chmod +x path/to/protoc`

- If the protoc binary file is not available on your system, download it from the official protobuf website and add the binary file to the PATH environment variable.

- Check that your JMeter and gRPC plugin versions are compatible.

- Restart JMeter and try running your test plan again.
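To check the first point from the JVM side (JMeter itself runs on Java), a small helper can walk the PATH roughly the way a shell does and report whether an executable protoc is visible. This is a diagnostic sketch, not part of JMeter or the gRPC plugin; the ProtocLocator class name is hypothetical, and the extra ".exe" check is there for Windows.

```java
import java.io.File;
import java.nio.file.Files;
import java.nio.file.InvalidPathException;
import java.nio.file.Path;
import java.util.Optional;

class ProtocLocator {

    // Search each directory of a PATH-style string for an executable file named
    // `name`; the ".exe" variant covers Windows installations.
    static Optional<Path> findExecutable(String name, String pathEnv) {
        if (pathEnv == null) {
            return Optional.empty();
        }
        for (String dir : pathEnv.split(File.pathSeparator)) {
            if (dir.isBlank()) {
                continue;
            }
            for (String candidate : new String[] {name, name + ".exe"}) {
                try {
                    Path p = Path.of(dir, candidate);
                    if (Files.isRegularFile(p) && Files.isExecutable(p)) {
                        return Optional.of(p);
                    }
                } catch (InvalidPathException e) {
                    // Skip malformed PATH entries
                }
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        Optional<Path> protoc = findExecutable("protoc", System.getenv("PATH"));
        System.out.println(protoc
                .map(p -> "protoc found at " + p)
                .orElse("protoc not found on PATH, or not executable"));
    }
}
```

Run it with the same user and environment that launches JMeter, since a PATH change made in one terminal is not necessarily visible to a JMeter instance started elsewhere.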

gRPC vs. REST API

- RESTful APIs and gRPC have significant differences in terms of their underlying technology, data format, and API contract requirements.

- Unlike RESTful APIs, which are typically served over HTTP/1.1, gRPC is built on top of the newer and more efficient HTTP/2 protocol. This allows for faster communication and reduced latency, making gRPC well suited to high-performance applications.

- Another key difference is in the way data is serialized. gRPC uses Protocol Buffers, a binary format that is more compact and efficient compared to the text-based JSON format used by REST. This makes gRPC more suitable for applications with large payloads and high traffic volume.

- In terms of API contract requirements, gRPC is stricter than REST. The API contract must be clearly defined in the proto file, which serves as the single source of truth for the API. REST's API contract, on the other hand, is often looser and more flexible, although it can be defined using OpenAPI.

Overall, both RESTful APIs and gRPC have their strengths and weaknesses, and the choice between the two will depend on the specific needs of the application.
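The size difference between Protocol Buffers and JSON is easy to see on a single integer field. The hand-written Java sketch below (not the protobuf library, and covering only the varint wire type) encodes field number 1 with the value 150 and compares it with the equivalent JSON text `{"id":150}`. The field name id is an illustrative assumption; in protobuf the name lives only in the .proto file, which is part of why the payload is smaller.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;

class ProtobufVarintDemo {

    static final int WIRE_TYPE_VARINT = 0;

    // Encode a value as a protobuf base-128 varint: 7 bits per byte,
    // least significant group first, high bit set when more bytes follow.
    static void writeVarint(ByteArrayOutputStream out, long value) {
        while ((value & ~0x7FL) != 0) {
            out.write((int) ((value & 0x7F) | 0x80));
            value >>>= 7;
        }
        out.write((int) value);
    }

    // Encode a message with one integer field: tag = (field_number << 3) | wire_type.
    static byte[] encodeIntField(int fieldNumber, long value) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        writeVarint(out, ((long) fieldNumber << 3) | WIRE_TYPE_VARINT);
        writeVarint(out, value);
        return out.toByteArray();
    }

    public static void main(String[] args) {
        byte[] proto = encodeIntField(1, 150);                       // 08 96 01
        byte[] json = "{\"id\":150}".getBytes(StandardCharsets.UTF_8);
        System.out.println("protobuf bytes: " + proto.length);       // protobuf bytes: 3
        System.out.println("JSON bytes: " + json.length);            // JSON bytes: 10
    }
}
```

Three bytes against ten is a toy case, but the same effect (no field names, compact numbers) scales up with larger messages and is one reason binary payloads help under high traffic.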

How to create a gRPC script with JMeter? | JMeter gRPC protocol load testing

- Install the gRPC plugin for JMeter. You can find the plugin on the JMeter Plugins website or you can download it using the Plugin Manager in JMeter.

Alternatively, download the latest version of the plugin manually.

- Create a new Test Plan in JMeter and add a Thread Group.

- Add a gRPC Sampler to the Thread Group. To do this, right-click on the Thread Group and select "Add > Sampler > gRPC Sampler".

- Configure the gRPC Sampler. You will need to provide the following information:

  - Server address: the address of the gRPC server you want to test.

  - Service name: the name of the gRPC service you want to test.

  - Method name: the name of the method you want to call.

  - Request data: the data you want to send to the gRPC server.

  - Proto Root Directory: the root directory path that holds your .proto files.

  - Library Directory: the path to an additional .proto library directory, if any (optional).

  - Full Method: populated automatically when you click the Listing button.

  - Optional Configuration: authorization details, if any.

  - Send JSON Format With the Request: your request body in JSON format.

- Add any necessary configuration elements or assertions to the Test Plan.

- Run the Test Plan and analyze the results.

What is gRPC?

The gRPC framework is a powerful tool for developing APIs that can handle a high volume of traffic while maintaining performance. It is widely adopted by leading companies to support various use cases, ranging from microservices to web, mobile, and IoT applications.

One of the key features of gRPC is its use of Protobuf (Protocol Buffers) as an Interface Definition Language (IDL) to define data structures and function contracts in a structured format using .proto files. This makes it easier to create and manage APIs while ensuring consistency and compatibility across different platforms and languages.

gRPC uses the Protocol Buffers data format for serializing structured data over the network. It supports multiple programming languages, including C++, Java, Python, Ruby, Go, Node.js, C#, and Objective-C.

gRPC provides a simple and efficient way to define remote procedures and their parameters using an Interface Definition Language (IDL). It generates client and server code automatically based on this IDL specification. The generated code handles all the networking details, allowing developers to focus on the business logic of their applications.

One of the key benefits of gRPC is its high performance. It uses HTTP/2 as its transport protocol, which provides features such as multiplexing, flow control, and header compression, resulting in lower latency and reduced bandwidth usage. Additionally, gRPC supports bidirectional streaming, making it suitable for building real-time applications.

Wednesday 5 April 2023

gRPC request testing using VuGen (LoadRunner) | gRPC performance testing in LoadRunner

Start by creating a new VuGen script and selecting the "gRPC" protocol under "Web Services" in the protocol selection window.

In the "Vuser_init" section of the script, add the following code to create a gRPC channel and stub

- Finally, in the "Vuser_end" section of the script, add the code to clean up the gRPC channel and stub:

Sunday 19 March 2023

'npm' is not recognized as an internal or external command, operable program or batch file: Windows 10 fix

- Install Node.js: Download and install the latest version of Node.js from the official website https://nodejs.org/. Follow the installation instructions for your operating system.

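- Verify Node.js installation: After the installation is complete, open a new terminal window and run `node -v`. If Node.js is installed, it prints its version number.

- Verify npm installation: Run `npm -v` to verify that npm is installed.

- Check PATH: If npm is still not recognized, make sure the Node.js installation folder (by default C:\Program Files\nodejs) is listed in the PATH environment variable, then open a new terminal window.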

Saturday 18 March 2023

How to read the contents of a text file in LoadRunner and make changes to that file | Read a text file with LoadRunner

Friday 17 March 2023

Capture dynamic data and write it to an external file in LoadRunner

```c
Action()
{
    char *dynamic_data;
    long fp; // LoadRunner's own fopen examples declare the file handle as long

    // Register the capture before the request that returns the dynamic data
    web_reg_save_param("dynamic_data", "LB=<start>", "RB=<end>", LAST);

    // Make the request that returns the dynamic data
    web_url("example.com", "URL=http://example.com/", "Resource=0", "RecContentType=text/html", "Referer=", "Snapshot=t1.inf", "Mode=HTML", LAST);

    // Retrieve the captured dynamic data
    dynamic_data = lr_eval_string("{dynamic_data}");

    // Write the captured data to a file ("w" overwrites on every iteration; use "a" to append)
    fp = fopen("output.txt", "w"); // Replace "output.txt" with your desired file name

    if (fp != NULL)
    {
        fputs(dynamic_data, fp);
        fclose(fp);
    }
    else
    {
        lr_error_message("Failed to open file for writing.");
    }

    return 0;
}
```

Here, web_reg_save_param registers a capture of the dynamic data between the specified left and right boundaries and stores it in a parameter named "dynamic_data". Because it is a registration function, it must come before the request. We then make the request that returns the dynamic data, causing it to be captured and saved in the parameter. Next, we retrieve the captured dynamic data using lr_eval_string and store it in the dynamic_data variable. Finally, we use standard C file I/O functions to open a file named "output.txt" for writing, write the dynamic data to the file using fputs, and then close the file using fclose.
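To make the boundary logic concrete outside VuGen, here is a plain-Java sketch of the same two steps: take the text between the first left boundary and the next right boundary, then overwrite a file with it. It only mimics the default first-match behaviour for illustration; it is not how LoadRunner implements web_reg_save_param, it ignores options such as ORD or NotFound, and the BoundaryCapture class name is hypothetical.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Optional;

class BoundaryCapture {

    // Return the text between the first occurrence of the left boundary and the
    // next occurrence of the right boundary, or empty if either is missing.
    static Optional<String> capture(String body, String lb, String rb) {
        int start = body.indexOf(lb);
        if (start < 0) {
            return Optional.empty();
        }
        start += lb.length();
        int end = body.indexOf(rb, start);
        if (end < 0) {
            return Optional.empty();
        }
        return Optional.of(body.substring(start, end));
    }

    // Overwrite the file with the captured value, like fopen(..., "w") followed by fputs.
    static void writeValue(Path file, String value) throws IOException {
        Files.writeString(file, value, StandardCharsets.UTF_8);
    }
}
```

For a body such as `<start>abc123<end> <start>zzz<end>`, `capture(body, "<start>", "<end>")` yields abc123, the first match, which mirrors what the C script saves by default.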

Resolving Performance Testing Issues with GWT | Google Web Toolkit in Performance Testing

If you're experiencing issues with GWT (Google Web Toolkit) in your performance testing, don't worry! There are a few steps you can take to solve these problems and improve your testing results.

First, ensure that you have the right version of the GWT Developer Plugin installed on your browser. If you're using an outdated version, you may encounter issues when recording your scripts.

Next, check your script for any errors or issues that may be causing problems with GWT. One common issue is the failure to properly handle asynchronous calls. To fix this, use the GWT synchronizer function to ensure that all asynchronous calls are completed before moving on to the next step in your script. You may also want to adjust your script's think time, or the amount of time between steps, to more accurately reflect real-world user behavior. GWT applications often have a high level of interactivity, so increasing think time can help ensure accurate test results.

Finally, check your server configuration to ensure that it can handle the load generated by your performance testing. This includes monitoring your CPU and memory usage, as well as ensuring that your server is properly configured to handle multiple simultaneous requests.

By following these steps, you should be able to resolve any issues you're experiencing with GWT in your performance testing and improve the accuracy of your test results.

Thursday 16 March 2023

java.lang.OutOfMemoryError | JVM issues

To resolve a java.lang.OutOfMemoryError, there are several options:

- Increase the heap space: The heap is where Java stores its objects and data structures. You can raise the maximum heap size by adding the "-Xmx" flag to the command line when running the Java program. For example, "java -Xmx2g MyProgram" sets the maximum heap size to 2 GB.

- Reduce memory usage: You can also reduce the memory usage of your Java program by optimizing your code and avoiding unnecessary object creation. For example, you can use primitive data types instead of object wrappers, and reuse objects instead of creating new ones.

- Use a memory profiler: A memory profiler can help you identify memory leaks and other memory usage issues in your Java program. You can use tools like VisualVM, Eclipse MAT, or YourKit to analyze the memory usage of your program and identify areas for optimization.

- Upgrade your hardware: If you are running out of physical memory, you can upgrade your hardware to increase the amount of available memory. You can also consider using a cloud-based infrastructure that allows you to scale up or down your resources as needed.
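Before and after changing -Xmx, it helps to confirm what the JVM actually received. The small Java sketch below (the HeapInfo class name is illustrative) prints the maximum heap the JVM will use and the heap currently in use, using the standard Runtime API.

```java
class HeapInfo {

    static final long MB = 1024L * 1024L;

    // Maximum heap the JVM will attempt to use (governed by -Xmx when it is set).
    static long maxHeapMb() {
        return Runtime.getRuntime().maxMemory() / MB;
    }

    // Heap currently in use: heap allocated so far minus its free portion.
    static long usedHeapMb() {
        Runtime rt = Runtime.getRuntime();
        return (rt.totalMemory() - rt.freeMemory()) / MB;
    }

    public static void main(String[] args) {
        System.out.println("Max heap (MB): " + maxHeapMb());
        System.out.println("Used heap (MB): " + usedHeapMb());
    }
}
```

Running `java -Xmx2g HeapInfo.java` (single-file launch, Java 11 or later) should report a maximum close to 2048 MB; the figure can be slightly lower than the -Xmx value because some garbage collectors exclude part of the heap from this number.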