2. Configure Tosca:

- Open Tosca and navigate to `ExecutionLists`. This is where you manage your test execution configurations.

- Create a new ExecutionList, or open the existing one you want to integrate with NeoLoad.

- Add the test cases you want to run with NeoLoad to this ExecutionList.

3. Install the NeoLoad Integration Pack:

- Download the NeoLoad Integration Pack from the Tricentis website. This pack provides the components needed to integrate Tosca with NeoLoad.

- Follow the installation instructions provided with the Integration Pack to install it on your machine.

4. Configure the NeoLoad Integration in Tosca:

- In Tosca, navigate to `Settings > Options > Execution > NeoLoad`. This is where you configure the NeoLoad integration settings.

- Provide the path to the NeoLoad executable so that Tosca can invoke NeoLoad during test execution.

- Configure NeoLoad settings such as the project name, scenario name, and runtime parameters. These settings determine how NeoLoad runs the load test.
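The values entered in step 4 amount to a small configuration record. The Python sketch below is hypothetical: `NeoLoadSettings` and its field names are illustrative and do not correspond to Tosca's internal option names. It shows the kind of sanity checks worth doing before you enter the values, such as confirming that the executable path points at a real file.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from pathlib import Path


@dataclass
class NeoLoadSettings:
    """Hypothetical record of the NeoLoad values entered in Tosca (names are illustrative)."""

    executable: Path      # path to the NeoLoad executable
    project_name: str     # NeoLoad project to open
    scenario_name: str    # scenario within that project
    runtime_parameters: dict[str, str] = field(default_factory=dict)  # name -> value

    def problems(self) -> list[str]:
        """Return a list of problems; an empty list means the values look usable."""
        issues = []
        if not self.executable.is_file():
            issues.append(f"NeoLoad executable not found: {self.executable}")
        if not self.project_name.strip():
            issues.append("project name is empty")
        if not self.scenario_name.strip():
            issues.append("scenario name is empty")
        for name in self.runtime_parameters:
            if not str(name).strip():
                issues.append("runtime parameter with an empty name")
        return issues


# Example with a deliberately missing scenario name (hypothetical values).
settings = NeoLoadSettings(Path("NeoLoad/bin/NeoLoadCmd"), "WebShop", "")
for issue in settings.problems():
    print(issue)
```

A non-empty list from `problems()` means at least one value should be corrected before you save the settings.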

5. Configure the Tosca Integration in NeoLoad:

- Open NeoLoad and navigate to `Preferences > Projects`. This is where you configure NeoLoad's integration with external tools such as Tosca.

- Enable the Tosca integration and provide the path to the Tosca executable so that NeoLoad can communicate with Tosca during test execution.

6. Define the NeoLoad Integration in the Tosca ExecutionList:

- In your Tosca ExecutionList, select the NeoLoad integration as the execution type. This tells Tosca to use NeoLoad for load testing.

- Configure the NeoLoad-specific settings, such as the test duration, the load policy, and any other parameters your performance testing requires.
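A load policy describes how many virtual users run at each point in the test. As an illustration, the following Python sketch models a stepwise ramp-up: start with some users and add a fixed number at a fixed interval until a maximum is reached. The function and parameter names are assumptions for this example, not NeoLoad's own field names or implementation.

```python
import math


def users_at(t_s: float, start: int, step: int, every_s: float, maximum: int) -> int:
    """Virtual users active t_s seconds into a stepwise ramp-up (illustrative model)."""
    if t_s < 0 or every_s <= 0:
        raise ValueError("t_s must be >= 0 and every_s must be > 0")
    steps_done = int(t_s // every_s)  # completed intervals so far
    return min(start + steps_done * step, maximum)


def time_to_peak(start: int, step: int, every_s: float, maximum: int) -> float:
    """Seconds until the ramp-up first reaches `maximum` users."""
    if start >= maximum:
        return 0
    if step <= 0:
        raise ValueError("step must be > 0 to reach the maximum")
    return math.ceil((maximum - start) / step) * every_s


def schedule(duration_s: float, start: int, step: int, every_s: float, maximum: int) -> list:
    """(time, users) pairs at each interval boundary from 0 up to duration_s."""
    n = int(duration_s // every_s)
    return [(i * every_s, users_at(i * every_s, start, step, every_s, maximum)) for i in range(n + 1)]


# Hypothetical policy: 10 users, +10 every 60 s, capped at 100.
print(time_to_peak(10, 10, 60, 100))   # 540
print(schedule(180, 10, 10, 60, 100))  # [(0, 10), (60, 20), (120, 30), (180, 40)]
```

With this example policy, peak load arrives only after 540 seconds, so a test duration shorter than that never exercises the system at the full 100 users. Checking the time to peak against the configured duration is a quick way to catch that mismatch.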

7. Run the Tosca ExecutionList with the NeoLoad Integration:

- Run the Tosca ExecutionList as you normally would. Tosca then triggers NeoLoad to execute the load test using the settings you defined.

- During execution, Tosca communicates with NeoLoad to start the load test and monitor its progress.
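Tosca performs the launch for you. If you want to confirm that the NeoLoad project and scenario also run on their own, outside Tosca, a headless launch from a script is one option. This Python sketch assumes the `NeoLoadCmd` command-line launcher with `-project`, `-launch`, and `-noGUI` options; treat those names as assumptions and check them against the documentation for your NeoLoad version. `build_command` and `run_load_test` are hypothetical helpers.

```python
import subprocess
from pathlib import Path


def build_command(neoload_cmd: Path, project_file: Path, scenario: str) -> list:
    """Assemble a headless NeoLoad launch command.

    Assumes NeoLoadCmd's -project, -launch and -noGUI options; verify them for your version.
    """
    return [str(neoload_cmd), "-project", str(project_file), "-launch", scenario, "-noGUI"]


def run_load_test(command: list, timeout_s: float) -> subprocess.CompletedProcess:
    """Run the launch command and wait for it to finish.

    stdout and stderr are captured as text for later inspection. Raises
    subprocess.TimeoutExpired (after killing the process) if the run takes
    longer than timeout_s seconds.
    """
    return subprocess.run(command, capture_output=True, text=True, timeout=timeout_s)
```

A call such as `run_load_test(build_command(exe_path, project_path, "Smoke"), timeout_s=7200)` returns a `CompletedProcess` whose `returncode` and `stdout` you can inspect. Pick a timeout comfortably longer than the configured test duration, because `subprocess.run` stops the launcher once the timeout expires.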

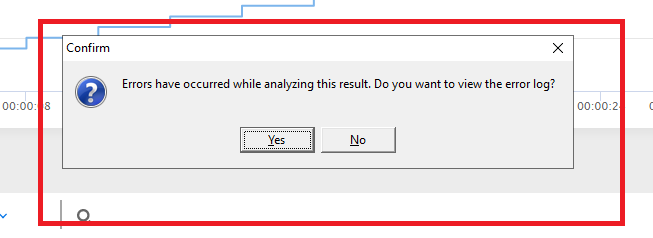

8. Analyze the Results:

- Once the load test completes, analyze the results in both Tosca and NeoLoad.

- Use Tosca to review the functional test results, and NeoLoad to analyze performance metrics such as response times, throughput, and server resource utilization.

- Identify any performance issues or bottlenecks, address them, and re-run the test to confirm the improvement.
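NeoLoad computes these metrics for you, but the arithmetic behind them is worth knowing when you compare runs. The Python sketch below works on a hypothetical `Sample` record (not NeoLoad's export format) and computes the average and 90th-percentile response time, throughput in requests per second, and the error rate. It uses the nearest-rank percentile definition; NeoLoad's reports may use a different percentile method, so small differences are expected.

```python
import math
from dataclasses import dataclass


@dataclass
class Sample:
    """One request result. Hypothetical shape, not NeoLoad's export format."""

    start_s: float     # request start, seconds from test start
    elapsed_ms: float  # response time in milliseconds
    ok: bool           # True if the request succeeded


def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% of values <= it."""
    if not values or not 0 < pct <= 100:
        raise ValueError("need values and 0 < pct <= 100")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def summarize(samples):
    """Average and p90 response time, throughput (requests/s) and error rate."""
    if not samples:
        raise ValueError("no samples")
    times = [s.elapsed_ms for s in samples]
    # Observation window: first request start to last response end, in seconds.
    window_s = max(s.start_s + s.elapsed_ms / 1000 for s in samples) - min(s.start_s for s in samples)
    return {
        "avg_ms": sum(times) / len(times),
        "p90_ms": percentile(times, 90),
        "throughput_rps": len(samples) / window_s if window_s > 0 else float("nan"),
        "error_rate": sum(not s.ok for s in samples) / len(samples),
    }
```

Throughput here divides the request count by the window from the first request's start to the last response's end; a tool that divides by the configured test duration instead will report a somewhat different figure.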

By following these steps, you can integrate Tosca with NeoLoad and combine functional and performance testing in a single workflow.